What I would like you to do is to find a topic from this week's readings that you were interested in and search the internet for material on that topic. You might, for example, find people who are doing research on the topic, you might find web pages that discuss the topic, you might find YouTube clips that demonstrate something related to the topic, etc. What you find and use is pretty much up to you at this point. But use at least 3 sources.

Once you have completed your search and explorations, I would like you to say what your topic is, how exactly it fits into the chapter, and why you are interested in it. Next, I would like you to take the information you found related to your topic, integrate/synthesize it, and then write about it. At the end, please include working URLs for the three websites.

By now you all should be skilled at synthesizing the topical material you have obtained from the various web sites you visited. If you need a refresher please let me know.

Thanks,

For this section's topical blog, I chose variable ratio (VR) scheduling. I chose this topic because I found it interesting that this schedule is associated with the highest rate of responding. Variable ratio scheduling is a schedule of reinforcement in which a response is reinforced after an unpredictable number of responses, usually within some range. Gambling and the lottery are two examples of this, but what I was more interested in was whether such a pattern could be used in human learning.

With gambling and the lottery it is, but I was thinking of other ways. Does this schedule of reinforcement produce a higher rate of learning in humans than in the nonhuman organisms (e.g., rats) that are used in experiments? As behavior and learning go hand in hand, it was not surprising to find that such a schedule of reinforcement affects humans as well as nonhumans. However, through the readings I found that VR scheduling elicits a higher learning rate for nonhuman organisms. Humans, having been reinforced all their lives, are not naive rats, so a fixed interval (FI) schedule works better for producing a learned behavioral response in humans.

Sources:

http://psychology.about.com/od/vindex/g/def_variablerat.htm

http://www.ncbi.nlm.nih.gov/pmc/articles/PMC1338958/

http://alleydog.com/topics/learning_and_behavior.php

I decided to do my topical blog on the impact of reinforcement schedules on gambling habits and addictions. Obviously, when gambling, if you were reinforced every single time you played a hand in a card game or put a nickel into a slot machine, you would get bored, and even though you were winning money, you would have good reason to stop at some point because you would get tired of it. That’s why most methods of gambling, like slot machines (the example used most), end up being put on a variable schedule. A variable schedule can be either a variable ratio (where the organism receives reinforcement after an average number of responses) or a variable interval (where the organism receives reinforcement after an average amount of time). Long variable schedules are usually the most troublesome (and usually the most used in gambling) because they elicit a steady response: the organism knows it will eventually receive the reinforcement. This leads to the behavior being learned and becoming a habit, all the while the person is losing money.

There are many models that explain this behavior. The one I was just explaining most likely fits the social-learning model, where the organism learns to gamble by reacting to scheduled reinforcement. There are more theories, though, on why gambling can become so habitual and addicting beyond knowing that one will be reinforced at SOME point. The cognitive behavioral model also gives an explanation of why gambling can become so addictive. In addition to reinforcement schedules, levels of arousal also play a large part in the habituation of gambling. As the gambler is playing the game he becomes more and more aroused, either by excitement to win or by anxiety that he may lose everything, so he keeps playing until reinforcement occurs to ease the anxiety, which in turn becomes a cycle to achieve the same arousal and ease the same anxiety.

The last idea or concept that I learned about that makes gambling so addicting is the thought “I am special, or I am different” that can play a role in how often, how much money we spend, or how long we play a certain game. Not only are we taught this from an early age, that we, as individuals, are special, but it is a way of thinking to rationalize a behavior that is probably otherwise irrational. Those who set up games in casinos often times play into this thought process with their intermittent schedules by placing wins very close to each other right at the beginning of a game—this leads to the person thinking that they are perhaps different from others and are lucky, and encourages them to continue playing so that the same outcome will follow after spending longer time and spending more money at the same game.

http://home.vicnet.net.au/~ozideas/gambl.htm

http://www.problemgambling.ca/EN/ResourcesForProfessionals/Pages/AnIntroductiontoConceptualModelsofProblemGambling.aspx

http://www.limbicnutrition.com/blog/20-dont-shoot-the-dog/

I think this is an interesting topic because people are being positively reinforced for their actions in casinos, but in the end it appears that most people leave with aversive attitudes towards their game playing that night...

The topic I chose for this week is continuous reinforcement and intermittent reinforcement. Continuous reinforcement is termed as reinforcing the desired behavior every single time it occurs. This is used best during the first stages of learning in order to teach the strong association between the behavior and the response to that behavior.

Once the behavior has been learned through continuous reinforcement, it is usually replaced with intermittent reinforcement. Intermittent reinforcement is reinforcement that is given only some of the times the desired response occurs. It is sometimes used instead of continuous reinforcement after the desired response has been conditioned by continuous reinforcement. This is because the reinforcer may want to reduce the number of reinforcements needed to maintain the expected response.

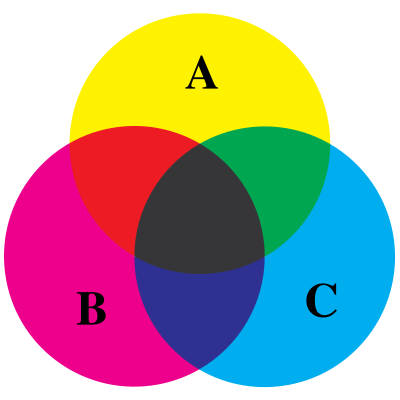

The four different schedules of intermittent reinforcement are: fixed-ratio, variable-ratio, fixed-interval, and variable-interval schedules.

Fixed-ratio: schedules where a response is reinforced only after a specified number of responses. This produces a high and steady rate of responding with only a small pause after the delivery of the reinforcer. An example could be that a student will be reinforced after turning in four homework assignments.

Variable-ratio: schedules where a response is reinforced after an unpredictable number of responses. This creates a high and steady rate of responding. An example could be a student who is reinforced after turning in about ten homework assignments on average, with the exact number changing each time.

Fixed-interval: schedules where the first response is reinforced only after a specified amount of time has elapsed. This causes high amounts of responding towards the end of an interval, but slower responding right after the delivery of the reinforcer. An example could be a student who is reinforced when he or she turns in a homework assignment every four days.

Variable-interval: schedules where a response is reinforced after an unpredictable amount of time has gone by. This produces a slow, steady rate of response. An example could be that a student is reinforced after he or she turns in homework once a week, but on different days of different weeks.
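To see how the four definitions differ as rules, here is a minimal Python sketch. It is only an illustration under my own assumptions, not something from the readings: the function name `make_schedule`, the `seed` parameter, and the choice to draw variable requirements uniformly between 1 and 2*value - 1 (so they average to `value`) are all made up for the example.

```python
import random

def make_schedule(kind, value, seed=0):
    """Return respond(now) -> bool for one of the four intermittent schedules.

    kind  : "FR", "VR", "FI", or "VI"
    value : number of responses (ratio) or time units (interval)
    """
    rng = random.Random(seed)
    variable = kind.startswith("V")

    def next_requirement():
        # Fixed schedules always require exactly `value`. Variable schedules
        # draw a requirement from 1..2*value-1, which averages to `value`
        # (an assumption made for this sketch).
        return rng.randint(1, 2 * value - 1) if variable else value

    state = {"need": next_requirement(), "count": 0, "start": 0}

    def respond(now):
        if kind in ("FR", "VR"):
            # Ratio schedules count responses since the last reinforcer.
            state["count"] += 1
            earned = state["count"] >= state["need"]
        else:
            # Interval schedules reinforce the first response made after
            # enough time has passed since the last reinforcer.
            earned = now - state["start"] >= state["need"]
        if earned:
            state.update(need=next_requirement(), count=0, start=now)
        return earned

    return respond

# Homework example: reinforced after every fourth assignment (FR 4).
fr = make_schedule("FR", 4)
print([fr(t) for t in range(1, 9)])
```

With FR 4, the fourth and eighth responses are the ones reinforced. Swapping in `make_schedule("VR", 4)` keeps the average the same but makes the exact reinforced response unpredictable, which is the only difference between the fixed and variable versions.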

http://psychology.about.com/od/behavioralpsychology/a/schedules.htm

http://wik.ed.uiuc.edu/index.php/Intermittent_reinforcement

http://www.lifecircles-inc.com/Learningtheories/behaviorism/Skinner.html

Thanks for the explanation of the different types of intermittent reinforcement. I was still really confused about the definitions, so your blog was really helpful.

Continuous reinforcement relates a lot to the dog video that we watched today in class. It really helps in training your animals or in getting your child to emit a desired behavior.

I wanted to focus on the different types of intermittent reinforcements: fixed ratio, variable ratio, fixed interval, and variable interval.

Ratio is behavior based. It deals with how many times you perform a behavior before getting reinforced. It may either be fixed or variable. Fixed ratio is when there is a specific number of responses that must be given before getting reinforced. Variable ratio allows for a variation in the number of responses needed. I really like to use the slot machine examples when thinking about these. They make the most sense to me. Fixed ratio would be after every 7 pulls on the slot machine, you would win and be reinforced. Variable ratio would be getting reinforced every once in awhile. It may be on the 4th pull, and the next on the 10th pull. It makes the most sense to have slot machines on a variable ratio schedule. That way, people will keep playing because they don't know if the next pull could lead to a reinforcement.

Interval schedules are time based. They have a certain amount of time that has to pass in order to get reinforced. With slot machines, every 30 minutes could lead to a reinforcement. This would be a fixed interval schedule. The person's behavior would change with this type of schedule. If they know they are going to be reinforced every 30 minutes, they would possibly try to guard their area so they have the greatest chance of being on the machine when the time comes to pull. Another example of this that I like is when people are working. I can easily apply this to my life and when I am working. When employees know when their boss or manager is going to come into the store, they will try to act more productive. When the boss is not there, employees may slack a little. That is one of the problems with the fixed interval schedule. It is not really a good measure of behavior, because the behavior will change. The variable interval schedules produce a steady rate of responses. The person receives the reinforcement after an average amount of time has passed. The person could be reinforced by pulling the handle after 20 minutes one time, then after 25 minutes the next time. It just has to be after an average amount of time. This can also be applied to a work situation. Even with an average amount of time passing before the boss comes, the employees may still change their behavior when the time comes near, because they start to learn the average time when the boss comes. This is part of why a variable schedule works best: the less the workers can predict when the boss will be coming in, the more they work hard all the time, and when the boss does come in, they will be reinforced for their behavior.

http://www.clickermagic.com/clicker_primer/clicker_p18.html

http://wik.ed.uiuc.edu/index.php/Intermittent_reinforcement

http://www.psychology.uiowa.edu/faculty/wasserman/glossary/schedules.html

I think it was a good idea to do a brief overview of multiple terms instead of just one for your topical blog. This section had a lot of information for us to read. Although you used the same examples as the book, I think it was useful to read your post because there were so many things to read and remember in the text that it was difficult to match terms to their definitions. Re-reading the same content in a smaller paragraph versus an entire chapter was helpful. I thought it was interesting that variable interval is set around an average time, and that we base it on our internal clocks, instead of keeping an exact time like a fixed interval.

After reading section 2.5, I really wanted to take a look at intermittent reinforcement. I know that obviously we can use continuous reinforcement, which is constantly reinforcing an organism. A more realistic way of reinforcing an organism is intermittent reinforcement, which is reinforcing an organism every now and then. Types of intermittent reinforcement that are popular are fixed ratio, variable ratio, fixed interval, and variable interval. After reading everything, I wanted to see how intermittent reinforcement works in the real world, so I found some examples of it.

Obviously there are a lot of things we can use intermittent reinforcement with. A good way to use it is with children. Shaping a child’s behavior can be challenging sometimes, and to get the target behavior we have to be consistent with reinforcement. But you can’t reinforce a child every time; otherwise they will expect a reinforcer every time they perform the target behavior you’re looking for. The key is to reinforce the child every now and then for emitting the target behavior you want them to perform. This way they understand that in order to get reinforced they will have to perform the desired behavior all the time and not just some of the time. The parent has to make sure they stay on track with their reinforcement and not give in; otherwise they will experience extinction and they will lose all the progress they made.

Another way intermittent reinforcement is used is in casinos. For example, slot machines can’t just let the player lose every time; otherwise people wouldn’t want to play the machines anymore. So gamblers have to be reinforced every now and then. Sometimes there will be small payouts of 2-10 times the amount you put in, then rarer payouts of 100 times, and even rarer payouts of 1000 times. This keeps the gambler intent on playing to win, for the small chance of success. Slot machines usually keep 5 to 25% of the money put into them and pay out the rest in random winnings. Also, slot machines account for 70% of casinos’ earnings.
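A rough sketch of how payout tiers like these could add up to a machine that keeps a share in the 5 to 25% range. The probabilities below are numbers I invented for illustration, not figures from any of my sources or from a real machine, and the names `PAYOUTS`, `return_to_player`, and `win_probability` are just labels for this example.

```python
# Hypothetical payout table: (multiplier of the bet, probability per play).
# These probabilities are made up purely for illustration.
PAYOUTS = [
    (2, 0.30),        # small, frequent payout
    (10, 0.02),       # upper end of the "small" range
    (100, 0.001),     # rare
    (1000, 0.00002),  # rarer still
]

def return_to_player(payouts):
    """Expected amount paid back per unit wagered."""
    return sum(multiplier * prob for multiplier, prob in payouts)

def win_probability(payouts):
    """Chance that a single play is reinforced with any payout at all."""
    return sum(prob for _, prob in payouts)

rtp = return_to_player(PAYOUTS)
hold = 1 - rtp  # the share the machine keeps in the long run

print(f"paid back per dollar: {rtp:.2f}, kept by machine: {hold:.2f}")
print(f"chance any one play pays: {win_probability(PAYOUTS):.3f}")
```

With these made-up numbers the machine keeps about 8% in the long run while still reinforcing roughly one play in three, so the gambler gets reinforced often enough to keep playing even though the expected result of every play is a loss.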

Intermittent reinforcement is also used with animals. At first when you are training animals you reinforce them every time they do a certain behavior, which is continuous reinforcement. Eventually you have to switch to intermittent reinforcement so the animal learns to continue the behavior without being reinforced every time.

So after reading all those examples, the main thing that is stressed when using intermittent reinforcement is that you must be consistent with your reinforcement. If you are inconsistent the organisms will not understand what you want from them and it will lead to extinction. If you’re a parent you must not give in to your child’s tantrums or fits; otherwise the target behavior will not be learned.

Terms: extinction, reinforcement, target behavior, intermittent reinforcement, emit, fixed ratio, fixed interval, variable ratio, variable interval, organism.

http://www.media-cn.com/intermittent-reinforcement.html

http://www.outofthefog.net/CommonNonBehaviors/IntermittentReinforcement.html

http://www.psywww.com/intropsych/ch05_conditioning/intermittent_reinforcement.html

For this assignment I decided to talk about the schedules of reinforcement. Schedules of reinforcement are the precise rules that are used to present (or to remove) reinforcers (or punishers) following a specified operant behavior. There are different schedules, and the different schedules come with different slopes. This confused me at first, but in the end I was able to understand the four different types: VR, VI, FI, and FR. Each has its own complex way of explaining behaviors and when they are emitted or likely to be emitted. Some have stair-step patterns while others have scalloped patterns.

These schedules are used to reinforce, and they do increase or decrease a pleasurable or aversive behavior. It all depends on what you are looking at or what you want to change. It’s just like how, if people know when their boss is going to be around, they are going to emit a working behavior when their boss shows up. By showing up, the boss elicits the behavior that is expected of the workers. When the boss leaves, the workers slack off and no longer emit the behavior they are expected to do. This all can be measured and more than likely will have a scalloped pattern.

This is also the same with gambling or even Skinner’s pigeons. When Skinner did his experiment with the pigeons he was able to record when they got the reward and what they did to get the reward, as well as how many times they pecked at the disc before they got a reward. Everything was recorded, and it was able to show a schedule of reinforcement.

http://www.psychology.uiowa.edu/faculty/wasserman/glossary/schedules.html

http://www.cehd.umn.edu/ceed/publications/tipsheets/preschoolbehaviortipsheets/schedule.pdf

http://www.youtube.com/watch?v=rst7dIQ4hL8

I decided to do my blog on post-reinforcement pauses to see if I could come up with a reason why they happen. I can hazard a guess at why, anywhere from perhaps they’re simply because the organism doesn’t want the reinforcer immediately after getting one all the way to the organism has been programmed to not expect another reinforcer right after getting the first one. However, I wanted to look for research on the topic.

Unfortunately, I wasn’t hit with a lot of reasons. It makes sense, seeing as there’s really no way to know if we’re accurate on our assumptions of why, especially if the organism doesn’t have the power of speech.

One thing I did find that sort of explains it is that the post-reinforcement pause varies more as the amount of reinforcer varies and is more stable when only the interval changes. At least, that’s how it works when the reinforcer is cocaine. This leads me to believe that after getting a reinforcer, the post-reinforcement pause is in part related to how badly the organism wants seconds or thirds. If the organism is starved to 90% of its free-feeding weight, perhaps the post-reinforcement pause would be a lot shorter than that of a rat that was neither starved nor satiated. I didn’t find any studies on this, but it sounds like something that would be interesting to do research on. It also might help explain why post-reinforcement pauses in fixed-interval and fixed-ratio situations increase over the duration of the experiment. The organism learns when to expect something and just waits around for the timing to be right to receive the reinforcement. The same concept was also applied to people’s grocery-shopping behaviors. Those who bought more shopped less frequently. Obvious answer: because the food they bought lasted longer and continued to be reinforcing for longer. Kind of a “well duh” moment for science, but it makes a valid point that post-reinforcement pauses and reinforcement schedules can be applied to a variety of situations. From that and the cocained-up rat study, I’ve determined that perhaps the PRP is a function of expectations and the duration of the effects of the reinforcer. I’ve also determined that getting paid to creep on shoppers and inject rats with cocaine isn’t such a bad gig.

Terms: post-reinforcement pause, reinforcer, organism, fixed interval, fixed-ratio.

Sites:

http://www.ncbi.nlm.nih.gov/pmc/articles/PMC1334108/pdf/jeabehav00123-0113.pdf

http://opensiuc.lib.siu.edu/cgi/viewcontent.cgi?article=1118&context=tpr

http://www.ncbi.nlm.nih.gov/pmc/articles/PMC1333805/pdf/jeabehav00137-0095.pdf

I chose to further research intermittent reinforcement because it was really interesting to me that intermittent reinforcement is eventually more effective and more desirable than continuous reinforcement. I was also curious to know how intermittent reinforcement didn’t lead to extinction.

Intermittent reinforcement is when reinforcement happens every so often for a behavior. If a behavior is reinforced every time then the reinforcement can lead to satiation. For example, if a rat is reinforced with a food pellet every time it presses a lever, the pellet will lose its value and not be perceived as desirable anymore. Eventually, if the rat is reinforced every time it presses down the lever, the food will become undesirable/aversive (satiation). However, if the rat is only reinforced occasionally for pressing the lever then the rat will begin to press the lever even more, increasing the frequency of the behavior. Intermittent reinforcement is resistant to extinction. Extinction is the process of eliminating a behavior. Intermittent reinforcement makes extinction harder to accomplish because the organism adapts to periods of receiving no reinforcement. Intermittent reinforcement can be related to parenting when dealing with a child who throws a tantrum. For example, suppose a child begins throwing a fit in a store because he/she wants a candy bar, and the parent ignores the child for five minutes until they can’t take it anymore and finally gives in by allowing the child to get a candy bar. This is intermittent reinforcement because the parent ignores the fit for the first few minutes but eventually gives in to the child’s want of the candy bar. If the parent takes the child into the store again and reinforces the child after eight minutes of throwing the tantrum, and the next time reinforces the child after thirteen minutes of a tantrum, the tantrum behavior will become more frequent and will be harder to extinguish. Apparently the best thing to do when a child is throwing a fit in the store is to remove the child from the store. I then found a video that provided an example of intermittent reinforcement. The man in the video asks for a glass of water and thanks the lady. The lady uses intermittent reinforcement because she only occasionally reinforces the man’s polite behavior by giving him a piece of candy. The man increases the polite behavior in hopes of being reinforced.

http://www.sosuave.com/quick/tip210.htm

http://www.psywww.com/intropsych/ch05_conditioning/intermittent_reinforcement.html

http://www.youtube.com/watch?v=JgMQJro09gA

For this blog I decided to focus my attention and research on post-reinforcement pause. Post-reinforcement pause takes place when using fixed schedules of reinforcement, resulting in a staircase-like pattern of response as opposed to the straight sloping pattern seen when using variable schedules. This phenomenon of a post-reinforcement pause is the reason variable schedules tend to be more efficient overall.

This makes fixed schedules (FR and FI) less consistent. The participant will respond up to the point the reinforcer is delivered and then take a momentary pause from responding. Since the reinforcer is fixed, they learn over time how many times they must emit a response for the reinforcer to be delivered. During my research I discovered some reasons why post-reinforcement pause may occur. I found that in some cases it is perhaps due to fatigue, satiation, or procrastination. Because the subject has just received a reinforcer, perhaps it is full (satiated)? Or maybe it is just taking a break because it is tired? Or maybe it knows how many times it needs to emit a response in order to receive the reinforcement, so it procrastinates until it is ready to emit the responses again. There are so many explanations for why post-reinforcement pause occurs that it is hard to pinpoint just one. The only way to eliminate the post-reinforcement pause is to avoid using fixed schedules of reinforcement and to use variable schedules... keep them guessing and they'll consistently emit the behavior/response you desire.

Terms: Post-reinforcement pause, fixed schedules of reinforcement (FI and FR), variable schedules, emit, reinforcement, and satiation.

http://www.youtube.com/watch?v=8Hd2rd1elOY

http://employees.csbsju.edu/ltennison/PSYC320/schedules.htm

http://en.wikipedia.org/wiki/Reinforcement

Ratio strain refers to a problem that may occur when using ratio schedules of reinforcement: behaviors may cease to be emitted due to quick increases in the number of responses required prior to attaining reinforcement. This description is far too complex when first read. I was very intimidated by this term at first. I am going to attempt to simplify this term and put it in a more understandable manner.

To break it down, a ratio strain occurs when ratio schedules are used. A ratio schedule is when a certain number of responses are needed in order to be reinforced. For example, when you have to pull the string on a lawnmower a few times in order for it to start. The ratio strain might refer to the lawnmower completely breaking down. Now no matter how many times you pull the string, it won't start.

In my opinion, after reading more about ratio strain, it kind of relates to extinction burst and post reinforcement pause. For example, it always takes your car a few tries before it starts. Eventually the car breaks down (like the lawnmower) and won't even turn over. The ratio schedule was the number of tries it took the car to turn on, and the ratio strain was the car breaking down. The car turning on was the continuous reinforcer whereas the car breaking down was the extinction. In this situation, the owner of the car may become angry and hit the steering wheel, known as an extinction burst.

As previously stated, it is also related to postreinforcement pause. To implement this term in the car example, the amount of time the owner of the car took before turning the key again is the post reinforcement pause.

Terms: ratio strain, ratio schedule, continuous reinforcement, extinction, extinction burst, post reinforcement pause.

http://www.scienceofbehavior.com/lms/mod/glossary/view.phpid=408&mode=letter&hook=R&sortkey=&sortorder=&fullsearch=0&page=2

http://en.wikipedia.org/wiki/Reinforcement

http://www.betabunny.com/behaviorism/Conditioning2.htm

I tried to think of something in my daily life that involves reinforcement schedules. I think this is one of those questions where the answer is so easy it's hard to see. Once you get going, lots of activities involve reinforcement schedules: commercial-watching behavior is reinforced on a variable ratio schedule, because not every commercial is pleasurable (some are rather aversive), but for me, a funny commercial is reinforcing enough to keep me from muting the tv during commercials, especially in February.

I realized today that a hobby of my own involves a VR reinforcement schedule: video games. Here is a youtube video explaining how game developers nowadays employ behaviorism in game development to make games more addicting (the sound cuts out after the first three minutes, but it explains the point really well in the first two):

http://www.youtube.com/watch?v=RIqQOFXndJ4

In the era of online games, getting people to buy the game is no longer enough: online gaming is sold on a subscription basis. People (myself included) pay $60/year for an xbox live subscription. The antecedent of this online game-playing behavior could be boredom or social pressure from peers to subscribe to online services for group play. People commonly form "clans", which are groups of people who get together and play video games. The behavior is the online game play itself, and the consequence is reinforcement in the form of achievements, a sense of pride in one's abilities (being able to "pwn noobs"), and social acceptance if one is a member of a gaming "clan". All of these consequences are reinforcers, because they lead to more online game-playing behavior (and renewed subscriptions, which put money in Microsoft's pockets)

The first reinforcer listed here is not necessarily dependent on winning. In games like Call of Duty, players can earn achievements with individual weapons without ever winning a game. Sometimes the achievement is attained without even trying. For example, there is usually an achievement for dying in some spectacular way like falling off of a building. This is clever, because it reinforces the game playing behavior (and therefore keeps the gamer playing) when a player is likely to begin to get frustrated (falling off of a building on accident is often frustrating).

The other two reinforcers are dependent on winning. Because almost no one can win every time, winning and the pride and social acceptance that come with it are on a variable ratio reinforcement schedule.

Here are links to the two articles discussed in the video. The first is the gamasutra article, which explains video games in behavioral terms to game designers who didn't take bmod classes. The second is the cracked.com article, which is definitely worth a read.

http://www.gamasutra.com/view/feature/3085/behavioral_game_design.php?page=1

http://www.cracked.com/article_18461_5-creepy-ways-video-games-are-trying-to-get-you-addicted.html

The cracked article is especially interesting because it goes further than acknowledging that video games use reinforcement schedules to hook gamers. The tone of the article suggests that the authors believe behavioral methods to be unethical. One particular example of a brilliant variation of a VR reinforcement schedule is a Chinese MMO (massively multiplayer online) game that has a continuous side-quest involving opening chests. *Not every chest has a virtual prize inside*, which distinguishes this as a VR reinforcement schedule (no pun intended, but I thought it was pretty funny all the same). Each chest requires a key, and the keys can only be obtained by paying real money to the game developers. On top of all of this, as an added reinforcer, there is a special virtual prize awarded to the person who opens the most chests in a day. The result is a bunch of people sitting in front of their computer screens clicking their mouses thousands upon thousands of times in an evening, thinking that this could be the night, only to be let down. They dry their tears and decide that tomorrow feels luckier. It's a reinforcement schedule inside of another reinforcement schedule.

It's all very behavioral, but is it ethical? I'm not sure. I'd highly recommend the cracked article though.

Terms: reinforcement schedules, variable ratio/VR, reinforced, reinforcement, reinforcer, reinforcing, antecedent, behavior, consequence

Links:

http://www.youtube.com/watch?v=RIqQOFXndJ4

http://www.gamasutra.com/view/feature/3085/behavioral_game_design.php?page=1

http://www.cracked.com/article_18461_5-creepy-ways-video-games-are-trying-to-get-you-addicted.html (This is the best one!)

I'm not big into playing video games, but this outlook on what we've read and the links you posted were very interesting. Thanks for going beyond the more common links to help provide understanding for the topics we have covered. I'll have to inform my little brother how little control he has! He won't be a fan of that!

I decided to explore the differences between fixed/variable ratio/interval to better understand the terms for my benefit.

Variable interval schedule is a schedule of reinforcement where a response is reinforced after an unpredictable amount of time has passed. Variable interval schedule of reinforcement is resistant to extinction. An example of this would be a parent attending to their child when it cries.

Variable ratio schedule is a schedule of reinforcement where a response is reinforced after an unpredictable number of responses. Gambling is a good example of a variable ratio schedule.

Fixed interval schedule is where the first response is rewarded only after a specified amount of time has elapsed. A good example of a fixed interval schedule is an allowance of twenty dollars every week. Does a paycheck qualify as a fixed interval schedule if every paycheck is not the same amount of money? Can the reinforcement be different but it has to just happen at the same time each time?

Fixed-ratio schedule is a schedule of reinforcement where a response is reinforced only after a specified number of responses. This schedule produces a high, steady rate of responding with a post-reinforcement pause. An example of this would be a job I had at a button factory, where I would get paid after a certain number of buttons were done.
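One way to keep the four schedules apart is a small simulation. This is only a rough sketch in Python: the function names, the uniform random ranges used for the "variable" schedules, and the evenly spaced responses are all assumptions made for illustration, not anything taken from the readings or the linked sites.

```python
import random


def fixed_ratio(n):
    """Reinforce every n-th response (e.g. paid per n buttons made)."""
    count = 0

    def respond(now):
        nonlocal count
        count += 1
        if count >= n:
            count = 0
            return True
        return False

    return respond


def variable_ratio(mean, rng):
    """Reinforce after an unpredictable number of responses averaging `mean`."""
    count = 0
    target = rng.randint(1, 2 * mean - 1)  # assumed range: 1 .. 2*mean - 1

    def respond(now):
        nonlocal count, target
        count += 1
        if count >= target:
            count = 0
            target = rng.randint(1, 2 * mean - 1)
            return True
        return False

    return respond


def fixed_interval(seconds):
    """Reinforce the first response made after `seconds` have elapsed."""
    last = 0.0

    def respond(now):
        nonlocal last
        if now - last >= seconds:
            last = now
            return True
        return False

    return respond


def variable_interval(mean, rng):
    """Reinforce the first response after an unpredictable wait averaging `mean`."""
    last = 0.0
    wait = rng.uniform(0, 2 * mean)  # assumed range: 0 .. 2*mean seconds

    def respond(now):
        nonlocal last, wait
        if now - last >= wait:
            last = now
            wait = rng.uniform(0, 2 * mean)
            return True
        return False

    return respond


def count_reinforcers(schedule, responses, seconds_between=1.0):
    """Emit evenly spaced responses and count how many get reinforced."""
    return sum(schedule(i * seconds_between) for i in range(1, responses + 1))


if __name__ == "__main__":
    rng = random.Random(0)
    print("FR-5:  ", count_reinforcers(fixed_ratio(5), 100))
    print("VR-5:  ", count_reinforcers(variable_ratio(5, rng), 100))
    print("FI-10s:", count_reinforcers(fixed_interval(10), 100))
    print("VI-10s:", count_reinforcers(variable_interval(10, rng), 100))
```

In this toy model, responding twice as fast doubles the reinforcers on the ratio schedules, but on the interval schedules it earns about the same number over the same stretch of time, because only the first response after the wait is reinforced.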

http://www.psychology.uiowa.edu/faculty/wasserman/glossary/schedules.html

http://psychology.about.com/od/behavioralpsychology/a/schedules.htm

http://allpsych.com/psychology101/reinforcement.html

Schedules of reinforcement are easy to see when you are trying to modify someone else's or your own behavior. But when we do things like pick random videos to watch, pick out the ABCs, and then try to figure out what schedule of reinforcement is used, it is a lot harder. I kept trying to think of real-life scenarios for Fixed Ratio, Fixed Interval, Variable Ratio, and Variable Interval, but could only come up with examples that were in the reading or that we had discussed in class. After some pondering I think I found a few clips from shows I've watched to help me remember how these schedules work.

The first clip is from Lost. In the show there is a clock that counts down from 108 minutes to zero. When it gets close to zero, an alarm starts beeping to warn someone that time is almost up. The alarm sounding every 108 minutes is the antecedent in this situation. Once the alarm sounds, someone is required to enter a specific set of numbers into a computer (this constitutes the behavior). Once these numbers have been entered correctly, the beeping stops and it is said that the world will not end, or some disaster will not occur (the consequence). The consequence in this procedure contains a reinforcement which is set to a Fixed Interval, because the amount of time between reinforcements does not change. The reinforcement is staying alive. We know FI will have a considerable post-reinforcement pause between responses, because the amount of time between required responses is set and we know how long we have. Now, where the characters are in relation to the computer they have to enter the numbers into will change. They may venture out into the jungle right after pushing the buttons, but the closer it gets to that time, the more they will stick around the computer. This is similar to the response rate increasing gradually and being highest when the time is near.

In supernatural shows there are often werewolves. Werewolves supposedly change on the full moon. We know that the full moon can be seen about every 29.5 days. Since we can count the days as numbers, this event could be labeled as Fixed Ratio, but since days also tell time, and the time the moon rises might change but will be around an average time, is it possible we could consider this a Variable Interval too? If a full moon changing someone into a werewolf is the antecedent, and harming (eating, biting) other species is the behavior, then the consequence would probably be a punishment. But if a werewolf doesn't want to harm anyone, they may take responsibility by locking themselves up on nights they know they are going to turn, changing the ABCs. Knowing the full moon is changing them into a werewolf would be the antecedent, the behavior would be locking themselves in a cage/dungeon, and the consequence would be a reinforcement if no one was harmed. The response to there being a full moon is to lock oneself up. The response rate for this would be moderate and steady. Since you are not quite sure of the specific time the moon will rise or start to change you, there is likely to be a small post-reinforcement pause, if any. If your wish is to not hurt people, you will probably make sure to take your precautions as early as possible. This variance of time will make it more likely that you will not miss being locked up in time. Your wish and ability to not hurt others will make it less likely that extinction will occur.

http://www.youtube.com/watch?v=m8O_pd_sUhU

http://www.youtube.com/watch?v=SZEx3HxKSnM

http://en.wikipedia.org/wiki/Schedules_of_reinforcement#Schedules_of_reinforcement

My topic of choice is fixed ratio. I think this can be applied to many things in everyday life. For example, I have to call my mom twice before she will answer the phone while she is at work. If I have to call exactly two times each and every time, it is then considered a fixed ratio. After we realize that a behavior is on a fixed ratio, we can then determine when to emit our desired behavior. This remains constant as long as we are continually getting reinforced. If I were to emit the behavior of calling my mom and she started answering sometimes on the first call and sometimes on the fourth call, but with an average of every two, it would then be considered a variable ratio. Let's say I need to call my mom every hour to give her an update on how babysitting my brother is going. The period in which I am not emitting the calling behavior would be considered the post-reinforcement pause. I know that she won't pick up until an hour has passed and I have called twice, so I don't bother emitting the behavior before then because I know that I will not be reinforced.

The ABCs for my behavior would be

A- needing to get a hold of my mom

B- Calling her twice

C- her answering and talking to me on the phone

Though I applied this reinforcement to my mom, it has also been used a lot in experiments with animals. For example, if I want to train my dog to let itself outside by ringing a bell three times, I would only emit the behavior of letting him outside if he emitted the behavior of three rings. If he only rang the bell once, I would not reinforce him, and he would eventually learn the fixed number of times necessary to ring the bell to go potty.

http://www.youtube.com/watch?v=kFf6aetdCCY

http://www.youtube.com/watch?v=8Hd2rd1elOY

http://www.youtube.com/watch?v=rst7dIQ4hL8&feature=related

Well we only read one section, so 2.5 I liked the most. Something I liked about it would be learning that there are so many different ways to schedule reinforcement. I previously thought of reinforcement as something you would perform directly after a behavior was emitted, but this section shows how many different instances and varied ways it can be used.

There wasn't a section of it that I liked the least but a lot of it seems like it can run together pretty easily. I feel that it may be a struggle to keep FR, FI, VR, and VI apart from one another and to know when it is best to use each one.

The most useful information would be the point that continuous reinforcement schedules are important to initially get a behavior under stimulus control. This pretty much means that, once the behavior is established, you are able to continue to emit it without getting reinforced each and every time. This then leads into the different types of reinforcement schedules we use in order to elicit the desired response.

Prior to reading this section, I was unaware of the different types of reinforcement that could be applied to altering behaviors and how to determine when the elicited responses will occur.

Three things I will remember are:

1. Ratio refers to the number of behaviors emitted while interval refers to the amount of time passing between each reinforcement.

2. Fixed refers to a set amount of behaviors (ratio) or a set amount of time (interval) while variable refers to an average amount (it will often include a range of acceptable values).

3. Different reinforcement schedules will elicit different patterns of responding.

Reading this section has taught me that if you vary the type of reinforcement schedule you use, you will elicit different rates at which behaviors will occur.

I would like to go over ratio strain and biological continuity further in class.

For my topical post I wanted to expand on and explore intermittent reinforcement. Intermittent reinforcement is delivered only some of the time, after different intervals or numbers of responses, to get the desired behavior. As a future teacher I wanted to explore this more and understand it so I will be more of a useful tool. Teachers struggle in their classrooms many times because of students being disruptive and having to punish them. Many times the teacher has to stop the class to discipline students, which takes away from the teacher's classroom flow. I wanted to get a better understanding of this concept because I feel this is the best way to use reinforcement in the classroom.

There are four different kinds of intermittent reinforcement: fixed ratio, variable ratio, fixed interval, and variable interval. All four are needed to keep variation in a classroom, because not all of them can be used appropriately in all situations. Fixed ratio, for example, can be used when a student gets an A on a test and you allow them to have extra recess time. Students will want to achieve this goal, so they may begin to study more and try harder on tests. The student continues to gain from getting a particular grade on tests. Variable ratio would be if a student is talking in class and the teacher changes up when she punishes him. The teacher may allow him to talk to 3 people in class one time before disciplining him, and the next time only allow it once. With this type of schedule the student is not sure when the consequence will come. Most likely, because they don't know when they will get the consequence, the talking will stop. With a fixed interval, students may learn that fire alarm drills will occur at a particular time. Because of this the students will always be aware when the drill is coming and will be prepared for it to happen. With variable interval in the same situation, the fire drill would occur at different times. This would not allow students to get into too much of a routine. This would test whether the students were prepared for the fire drill in different situations. From the extra readings I have done, I have been able to gain a better understanding of what situations each of these could be used in to get the most out of the reinforcement. I feel as if I continue to strengthen my understanding of Bmod as well as take what I'm learning and use it for my future job.

http://www.outofthefog.net/CommonNonBehaviors/IntermittentReinforcement.html

http://www.media-cn.com/intermittent-reinforcement.html

http://www.psywww.com/intropsych/ch05_conditioning/intermittent_reinforcement.html

http://www.kotancode.com/2010/12/30/dopamine-squirts-intermittent-reinforcement-and-mobile-apps/

For this topical blog I am choosing to do the four different schedules of reinforcement. The schedules are: fixed ratio, fixed interval, variable interval, and variable ratio. I wanted to look further into these because I think that they are interesting and will have a lot to research:) A fixed ratio is a specific set number of times a behavior must occur before it is reinforced. A fixed interval is a specific amount of time that must pass before the behavior is reinforced. A variable ratio usually has a set minimum and maximum number of times the behavior must occur; it is not always the same specific number. The ratio refers to the number of times the behavior occurs. A variable interval is an amount of time that changes (almost as if at random) within a range of time.

The websites I found further explained these concepts and made them more clear in my head. One website stated that a variable ratio schedule is much like a slot machine, golf, or a door-to-door salesman. This website also gave these examples for a variable interval schedule: a parent attending to the cries of their child, checking your voicemail, and fishing. Some examples of a fixed interval schedule would be baking a cake and waiting for the bus. An example of a fixed ratio schedule would be a job that pays based on units delivered. This particular website also gave examples of schedules of reinforcement in the video game world:)

The other website I found related Skinner's work with rats and conditioning to the technology world; it's definitely worth looking at! The blogger suggests that receiving an email is much like a variable schedule of reinforcement because "Most of it is junk and the equivalent to pulling the lever and getting nothing in return, but every so often we receive a message that we really want. Maybe it contains good news about a job, a bit of gossip, a note from someone we haven’t heard from in a long time, or some important piece of information. We are so happy to receive the unexpected e-mail (pellet) that we become addicted to checking, hoping for more such surprises. We just keep pressing that lever, over and over again, until we get our reward."

The last website is relevant but slightly out of context. It relates fixed reinforcement to the guitar-playing world:)

http://shetoldme.com/Business/Guitar-Building-Fixed-Neck-Reinforcement-The-Facts

http://danariely.com/2010/08/23/back-to-school-1/

http://www.betabunny.com/behaviorism/VRS.htm

I chose to do my research on continuous reinforcement. With continuous reinforcement the desired behavior is reinforced every time it occurs, which interests me because it creates a way for the desired behavior to be learned quickly, with reinforcement following every response. For an example, I looked up potty training and continuous reinforcement. The mom told her son that every time he used the big boy potty he would get a SpongeBob sticker, which is his favorite cartoon, so he was excited to use the potty to get a sticker each time. He associated using the big boy potty with getting a prize. Even though later in life he will have to learn to use the potty without the reinforcement of a sticker, it's a good way to display continuous reinforcement.

http://psychology.about.com/od/behavioralpsychology/a/schedules.htm

http://goanimate.com/movie/0RrQ8AI9RYGs